The New Cybernetics: Systems Thinking for the 21st Century

In this interview with Leslie Broudo, Australian National University’s Prof. Genevieve Bell discusses the history of cybernetics and how she is leading the challenge of reimagining the possibilities of the field for the 21st century and beyond. As Head of the School of Cybernetics, based in the College of Engineering and Computer Science, Bell emphasizes a transdisciplinary approach that considers people, technology, and the environment in order to help build products that ultimately assimilate divergent voices and perspectives.

Coller Venture Review —

You are off to an audacious next part of your journey. Tell us first, how did you get your start?

Genevieve Bell —

When I was a child we spent a lot of time moving around. My mom told us we had to make the world a better place, more fair and more just. She told us we had a moral obligation to get in the room where the decisions were being made. She told us that if you have a voice, you have to make it count for others – it was a sense of service – again, the notion of a moral obligation. “Do work that matters,” she said. “And not just good for you but good for others, including others that don’t have the access you do.”

I’ve been lucky and I’ve worked really hard to make that luck. If you’re in those rooms, you have to make a difference… to ensure that the technologies we build don’t stop us from being who we are. We have to make sure that the technology we build is not technology built with just one view of the world.

CVR —

Can you tell us about the new School of Cybernetics, and your view on how it fits within the changing technology, business, and social context globally?

Bell —

Over the last 23 years, I’ve been in and out of Silicon Valley, where people have been actively building the future, the world we now live in. As I have been in those conversations, and those imaginations of the world, it’s always been clear to me that we need a more contested, messy vision of the future, that the vision should not feel so neat and tidy.

The Media Lab did well for a long time, and I feel I have a responsibility now to tell stories about the future, and to do things in the present that cause that future. So over the last 4–10 years, the conversations we had about big data became the conversation about the cloud and then about AI. The energy has been the same for the last decade. And a lot of it is the view of the future. And a set of technologies that will change the world. But then you also have to actively disrupt the present to make those stories possible.

At the School of Cybernetics, we don’t want to intervene exactly, but to contain some of the energy, to ask what is the future that is being imagined here? How is that data being used? What are the inherent biases and limitations of that data and other worlds we’re imagining with it?

At some point, we also need to create people who are better equipped to handle those conversations. Because it’s not just the AI piece of the puzzle. It’s the whole system. And it’s what happens when AI starts to get inside things – whether it’s elevators, or trains, or the electrical grid, or our bodies. I don’t think it’s computer scientists or electrical engineers only.

CVR —

What is your approach to tackling such big questions?

Bell —

I feel critically aware that the whole system feels like a unit of analysis, like a critical theoretical unit by which we should make sense of things. As part of this sense making, I have found my way back to a set of conversations that took place in the 1940s and 1950s, including the Macy Conferences that started in 1946. This gathering brought together a group of thinkers from all over the world regularly over the next several years. They debated the future and tried to work out how the power of computing could be managed in such a way that it wasn’t used to create more of the destruction that had been witnessed in World War II. They wanted to build a different future than the present they found themselves in. They had a series of conversations about what the future might be like, about what it meant to have humans and computers co-exist, what the relationship between them might be. And that whole conversation unfolded under the banner of cybernetics, which was at that point the theory of control and communication of computing and humanity and the broader ecological systems. These turned out to be the most generative conversations of the 20th century. They were conversations that spawned artificial intelligence because the same practitioners who were in the cybernetics conversation eventually spun off and went on to build the AI discourse. A bunch of the other practitioners went off and built out most of Silicon Valley. Others went off and created most of the work around organizational development in Britain, while others went on to create computational art. It turns out if you scratch the surface of the most interesting people in the second half of the 20th century, underneath you will find a founding cybernetician.

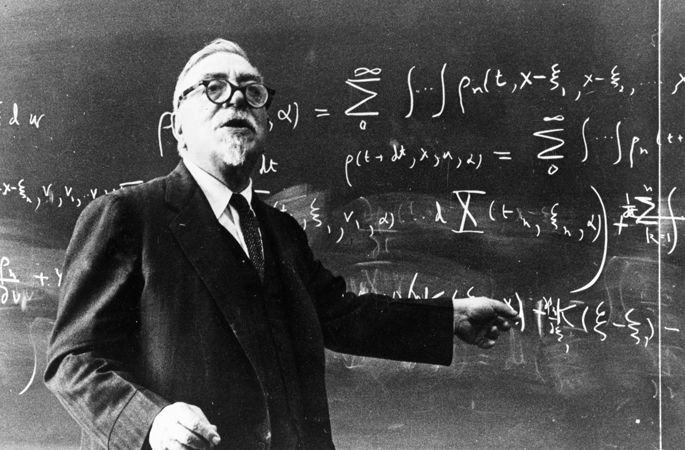

Norbert Wiener, considered to be the originator of cybernetics.

Credit: Courtesy MIT Museum

CVR —

You’ve talked a lot about power in a diversity of voices. Can you tell us a bit more?

Bell —

History tells us that the lessons we can draw from cybernetics aren’t just about a theory; they are also about the power there is in a diversity of voices – it gets you to productive discomfort. There is power in a conversation that unfolds over weeks and months and years, not hours. It takes a while to get to a good idea – thinking that we’re going to get it done in an afternoon is foolish actually. I think there’s power in ideas that have a certain kind of grace to them – that they hold their form enough that people can find their way, but they’re not so structurally set that people can’t go and reinterpret them.

The people in 1946 who gathered together over many years to discuss cybernetics helped shape our future. They created an idea that would endure. It didn’t have to be so rigidly determined that other people couldn’t take it and do something interesting with it. And for me, that’s about a certain idea having grace – an idea that holds but not so much that it excludes other people from it.

CVR —

What keywords do you want us to think about when we talk about data science? What changes as a result?

Bell —

The thing about data is that it’s always retrospective – you’re looking at what has already happened, not what will happen. And it is, as a result, in some ways, both conservative and confining. If data is always in the past, and always retrospective, and you are modeling the future, based on that, it creates some really interesting challenges. So it is about how do we think about information and asking ourselves a whole series of questions. How do we think about information architecture? How do we think about both the way data is created and managed? Now the thing about statistics is that if you look at its history you will find that eugenics is tied up very tightly with it, which is deeply troubling. But how do we teach people to recognize what is data? How data is created? How data can then be managed and manipulated? Not in the cynical sense but, ultimately, how you extract value out of data – whether that value is in terms of sense making, or prescriptive activities. Ultimately, we have to start to ask better questions about what gets made into data and what doesn’t.

CVR —

You talk about teaching your students to ask questions that probe “a step up.” What do you mean by this?

Bell —

Taking a step up is about pushing further on the ‘why’ and asking questions on the intentionality of something. If I give an example where you’re developing an app that will help me buy a sweater to go with my pants, I want to know what the intention of the app is. And sometimes we’re not good at pausing to really answer that question. Asking the right questions is about imagining a world of fast fashion and a world of just-in-time supply chains. You are imagining a world of credit cards, you are imagining a world of data trails, you are imagining a world of multiple other systems, you’re imagining a world of desire, you’re imagining a world where matching makes sense.

And so I think one of the things we aren’t good at is pausing to ask the question, what is this world? And what is the world that this object, in its making, will help bring into existence? And is that a world we really think is a good idea? And it’s very hard sometimes for people to stop and think about that and about what the consequences will be of this app coming to fruition.

CVR —

What does it mean to think about the nonhuman piece of the world that is also still biological?

Bell —

I grew up in a world of psycho-demographic segmentation and behavioral-based segmentation. And I often wonder if, in our desire to put everything into little neat, tidy boxes, we’re also missing something. So it is not just a more robust discourse about a bigger world. Our conversational landscape and worldview need to be slightly more expansive. It’s simply the fact that we know we need to have other conversations about the world that we inhabit that isn’t just us and the walls. We have to make a more complicated space for ourselves.

CVR —

You talk about helping to lead the future by bringing technology and people together in new ways. Can you comment on your underlying optimism?

Bell —

I think a lot of it is about how do we do a better job of telling stories about the future. We tell these ridiculous stories about how everything’s going to be different. And then it really isn’t. Starting with Frankenstein about 200 years ago, we have told really compelling stories about what happens when humans use technology to do the work of gods – generally nothing good will come of it. And those narratives have a very particular kind of resonance – it’s easy to tell stories about how things will go badly. It’s easy to tell the dystopian science fiction stories where AI is this singular monolithic thing that takes over. I feel like part of the work we have to do is tell more complicated stories about technology, where they’re not singular in their valence, i.e., no technology is going to be universally good or bad – there are going to be complications.

And yes, we’re going to have to think about regulation. And yes, we’re going to have to think about how we manage the supply chain. And we’re going to have to do this inside some constraints. But can I imagine a world in which there are a range of technical possibilities, some of which are excellent for us?

Well, of course, I can. But I tend to be less interested in that than I am about how we need to build the future we want to live in. So for me, sitting now inside a university, my imagination goes to how do I educate the next generation of citizens and develop a new type of engineer – so that they know how to ask the right questions, ones that I hope are richer, to help shape our future.

CVR —

What advice do you give your students when they graduate?

Bell —

I quote one of my bosses. I tell them that curiosity is the greatest form of insubordination. I tell them that being the person that asks all the questions all the time is never easy and that you have to be willing to be brave. I tell them they’re taking on a life where they are going to be the person who convenes conversations that don’t end easily, but that it’s good work. I tell them that it will sometimes be exhausting. I tell them that it will sometimes be really fun. I tell them there’ll be days where they think, “I just can’t do it anymore,” and all they want to do is eat chocolate, watch bad TV and buy shoes on the internet. And I tell them, that’s all okay. I tell them that the reason we built the program was so that they never have to feel like they are alone on this journey, that there’s always going to be someone else who came to this program with them. And that, you know, all they have to do is find a way to find that other person and be reminded that, yeah, it’s hard, but it’s still the right thing to be doing.

And I tell them that every day is going to be different than the one before. I remind them that it’s hugely important to celebrate the wins and create rituals for that, that they need to periodically find time to catch up to themselves. And that they also have an obligation to go build more places where the conversation is possible. And then I tell them, they’re always welcome back in the building wherever I am.